July 24, 2025

At a recent Sheffield AI meetup, I gave a talk that aimed to unpack a big question: Is AI coming for your job?

Short answer? No. But not for the reasons you might think.

Rather than fall into the trap of hyperbole, I approached the topic by stepping back and asking a different question: How have the skills and resources around building software changed over time?

Whilst on the surface it may appear that AI is going to take my job, AI is just the latest in a long line of innovations that promised to “replace” developers. None of them have.

To understand where we are now, we need to look back.

Back in the 1990s there were fewer than 70 programming languages, compared to nearly 400 in today’s world, if we look at the programming languages that GitHub recognises. If we ignore what GitHub is prepared to recognise, this stat balloons to well over 2,000 programming languages today.

Whilst 70 may sound like a lot, only around 10 of those languages were truly mainstream.

I started programming in the late 1990s, with a 1,200-page book on Visual Basic 5. Back then there was no Stack Overflow, and Google wasn’t really a thing. Instead, resources came on quarterly CDs from MSDN, and open source barely existed.

In that era, learning meant deep understanding. You couldn’t rely on copy-paste; you had to internalise core concepts, and fewer languages meant less choice, but it also meant you could genuinely master your tools.

In the early 2000s we saw the rise of code-sharing sites like SourceForge, CodeProject, and Planet Source Code, at a time when package managers were rare and GitHub had not yet been born. By the mid-2000s, package managers (PEAR, PyPI, RubyGems, Maven, etc.) had really caught on, which made code reuse practical.

It wasn't until 2008 that we saw the birth of GitHub and Stack Overflow, which are now indispensable resources.

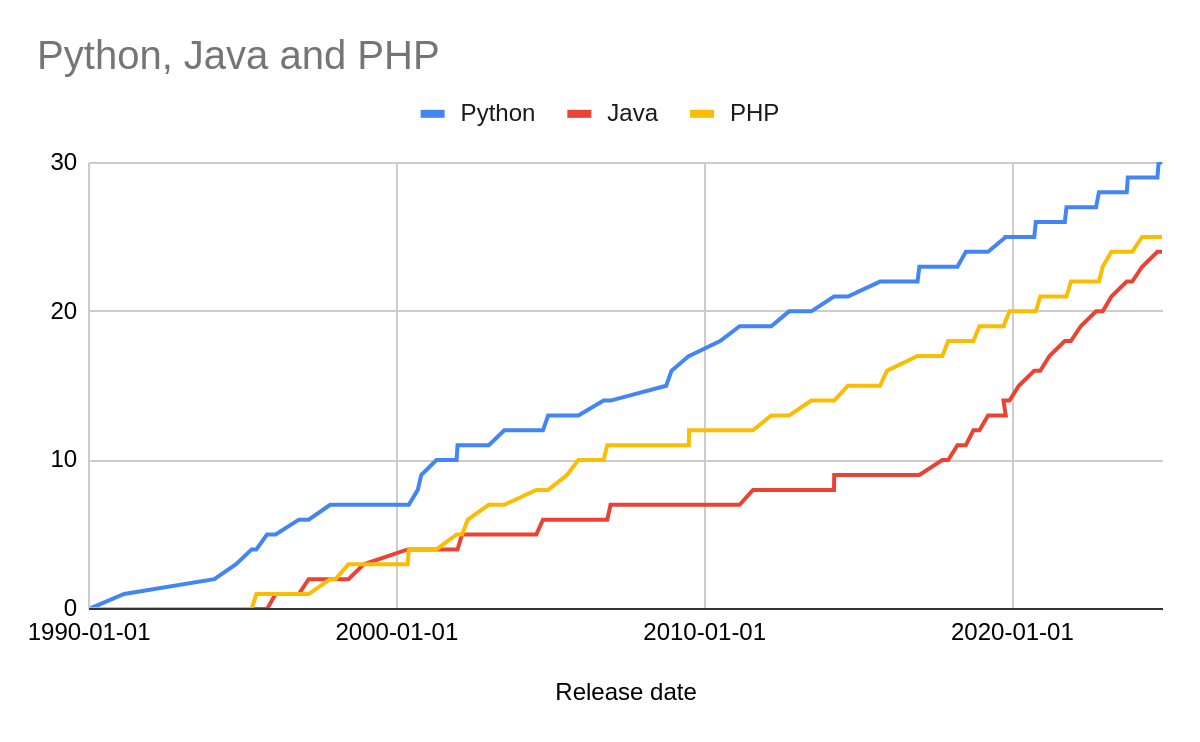

This rise of access to information is the catalyst for an explosion in new programming languages, frameworks, libraries and other innovations within the technology stack.

This also means that building a system is no longer just about writing the code from scratch. You need to understand the frameworks and libraries that your system is built upon, how it is packaged and hosted, etc. The range of technical things that you need to understand to build a system is growing.

All of this advancement led to a key shift. Googling became a skill, but only if you knew what you were looking for. If you misunderstood how to frame a problem, your search wouldn’t help. You needed contextual understanding to even ask the right questions.

By now, GitHub and Stack Overflow were standard tools. Facebook, Google, and others were open-sourcing major projects. The explosion of cloud, containers, CI/CD, ML frameworks, and more changed the game.

The rate of innovation for programming languages, frameworks and libraries also rapidly increased during this time.

Problem-solving became less about writing everything from scratch and more about knowing which tools to combine, and how. The rise of open source meant that problem-solving got faster, because comprehensive, feature-complete open-source tooling could be reused wholesale.

The depth of things you “needed to know” grew exponentially.

Books couldn’t keep up.

Your job became more about navigating an ecosystem: not just knowing one language, but being able to identify the right tool for the job.

Today, tools like ChatGPT and GitHub Copilot can spit out working code with a half-decent prompt. The temptation is to think: Great, the machine can code—my job’s toast.

But it’s not that simple.

These tools are fantastic at producing something. AI can also be great at augmenting existing resources and allowing you to find information more easily. But whether the information or that something is actually correct, secure, or scalable? That’s still on you.

How well AI tooling does a job can still vary, and there is benefit in knowing the craft that you’re asking AI to do, as it allows you to apply a level of context, skill and vetting to the output.

We’ve seen real-world examples of AI-generated code being released into production without any safeguards or vetting:

We were tasked with integrating with a system that would fail whenever an apostrophe or similar character legitimately appeared in the payload. It turned out that some of the code in that system, which was produced by a third party, had been generated by ChatGPT. The core issue? A SQL injection vulnerability caused by concatenating unsanitised input into query strings. Basic stuff, but easily missed if you don’t understand why input sanitisation and parameterised queries matter.
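To make that concrete, here is a minimal sketch of the difference between a concatenated query and a parameterised one. It is not the third party's code: the `customers` table, the function names and the use of Python's built-in `sqlite3` module are all assumptions chosen purely for illustration.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Build a throwaway in-memory database (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, surname TEXT)")
    # Parameterised inserts: the apostrophe in O'Brien is treated as data.
    conn.executemany(
        "INSERT INTO customers (surname) VALUES (?)",
        [("O'Brien",), ("Smith",)],
    )
    return conn


def find_customer_unsafe(conn: sqlite3.Connection, surname: str) -> list:
    # Vulnerable: user input is spliced straight into the SQL text,
    # so any quote character changes the structure of the query.
    query = "SELECT id, surname FROM customers WHERE surname = '" + surname + "'"
    return conn.execute(query).fetchall()


def find_customer_safe(conn: sqlite3.Connection, surname: str) -> list:
    # Safer: the value is passed separately from the SQL text, so the
    # driver binds it as data and never parses it as SQL.
    query = "SELECT id, surname FROM customers WHERE surname = ?"
    return conn.execute(query, (surname,)).fetchall()
```

With this sketch, a perfectly legitimate surname like `O'Brien` makes `find_customer_unsafe` raise `sqlite3.OperationalError`, which is the kind of apostrophe failure described above. A crafted input such as `' OR '1'='1` makes it return every row in the table. `find_customer_safe` handles both inputs as plain data.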

Another example? A production system, built by a third party, that we were asked to audit and that stored passwords in plain text. I wish I were joking.
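For contrast, here is a rough sketch of what storing a password safely can look like, using only Python's standard library. The function names and the iteration count are assumptions for illustration. A real system would normally lean on a vetted library and current guidance rather than hand-rolling this.

```python
import hashlib
import hmac
import os

# Assumed work factor for this sketch; tune it to current guidance and hardware.
ITERATIONS = 600_000


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, digest). Store both; never store the password itself."""
    salt = os.urandom(16)  # a fresh random salt for every password
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, ITERATIONS
    )
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Re-derive the digest from the attempt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, ITERATIONS
    )
    return hmac.compare_digest(candidate, expected)
```

The point is that the database only ever holds a salt and a slow, one-way digest, so a leaked table does not immediately hand over every user's password.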

These aren’t just technical mistakes—they’re the consequence of failing to understand some of the fundamentals that go into building good software.

Part of the reason your job is safe is that we’ve seen examples where AI falls considerably short of the mark.

But another real challenge is that people still struggle to articulate what they actually want. Until AI can magically extract requirements and business intent from a client and convert them into production-ready, secure, scalable systems, your job is safe. After all, one of the hardest problems in building software is agreeing on what to build.

Fundamentally, the way good developers add value has changed. The focus has shifted away from purely writing code and towards:

Choosing the right tools and trade-offs

Navigating nuance and complexity

Understanding the “why,” not just the “what”

And here’s the kicker: AI won’t replace you. But someone who knows how to use AI well might.

AI is a tool—just like Stack Overflow, just like Google. It can be powerful. It can be misused. The value you get from it depends on your ability to frame problems, apply context, and validate output.

Used correctly, AI makes you faster. Used wrong, it makes you dangerous.

The core skills—judgement, understanding, craftsmanship—still matter. They always have. They always will.